Installation (Queens release; the next post covers installing with kolla-ansible)

Environment

`192.168.40.150 computer01 centos7 4.4.197`

1. Configure hostname mapping (add the host-to-IP mappings to /etc/hosts)

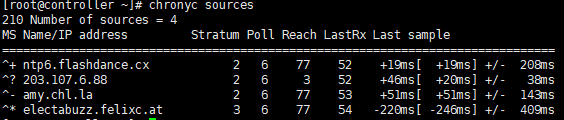
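As a rough sketch of this step, the mappings can be appended idempotently with a small script. Only `192.168.40.150 computer01` comes from the environment listed above; `CONTROLLER_IP`, `HOSTS_FILE`, and the `add_host` helper are illustrative assumptions, and the script writes to a scratch file unless `HOSTS_FILE` is pointed at /etc/hosts.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumption: only 192.168.40.150 (computer01) appears in the text above.
# CONTROLLER_IP is a placeholder; replace it with the controller's real address.
CONTROLLER_IP="${CONTROLLER_IP:-192.0.2.10}"
COMPUTE_IP="${COMPUTE_IP:-192.168.40.150}"

# Scratch file by default; set HOSTS_FILE=/etc/hosts to apply for real.
HOSTS_FILE="${HOSTS_FILE:-./hosts.example}"
touch "$HOSTS_FILE"

add_host() {
    local ip="$1" name="$2"
    # Append only if the hostname is not mapped yet, so reruns are harmless.
    if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$HOSTS_FILE"; then
        printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
    fi
}

add_host "$CONTROLLER_IP" controller
add_host "$COMPUTE_IP" computer01
cat "$HOSTS_FILE"
```

Run the same script on every node so that each one resolves both names.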

2. Configure time synchronization, with the controller node acting as the NTP server

On all nodes: `yum install chrony -y`

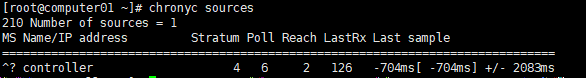

测试:

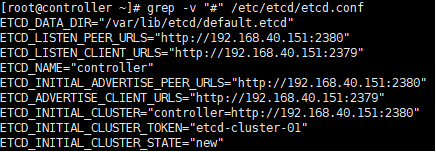

在controller节点上:

其他节点上:
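A minimal sketch of the two chrony settings this step usually comes down to: an `allow` line on the controller and a `server controller iburst` line everywhere else. The subnet `192.168.40.0/24` is an assumption inferred from the address above, and `OUT_DIR` and the snippet file names are illustrative; the script only writes snippets to a scratch directory for you to merge into /etc/chrony.conf.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumption: the management subnet is 192.168.40.0/24, inferred from the
# 192.168.40.150 address above; adjust it if yours differs.
NTP_SUBNET="${NTP_SUBNET:-192.168.40.0/24}"
# Scratch output directory; merge the snippets into /etc/chrony.conf by hand.
OUT_DIR="${OUT_DIR:-./chrony-example}"
mkdir -p "$OUT_DIR"

# Controller: let hosts in the management subnet query this server.
printf 'allow %s\n' "$NTP_SUBNET" > "$OUT_DIR/chrony.controller.snippet"

# Other nodes: use the controller as the time source.
printf 'server controller iburst\n' > "$OUT_DIR/chrony.node.snippet"

cat "$OUT_DIR"/chrony.*.snippet
# After editing /etc/chrony.conf on each node:
#   systemctl enable chronyd.service && systemctl restart chronyd.service
#   chronyc sources    # on the other nodes the controller should be listed
```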

3. Install the OpenStack packages (all nodes)

`yum install centos-release-openstack-queens -y`

4. Install the database (controller)

`yum install mariadb mariadb-server python2-PyMySQL -y`

5. Install RabbitMQ (controller)

`yum install rabbitmq-server -y`

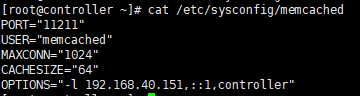

6. Install the caching service, memcached (controller)

`yum install memcached python-memcached -y`

Note: in `OPTIONS`, do not append `controller` at the end.

`systemctl enable memcached.service`

7. Install etcd (controller)

8. Install Keystone (controller)

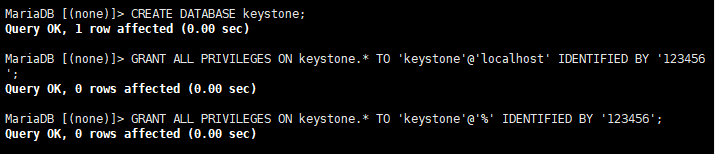

- Create the keystone database and grant privileges
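A sketch of the SQL this kind of step typically runs, following the create-then-grant pattern that repeats for glance, nova, neutron, and cinder later on. `service_db_sql` is a hypothetical helper and `KEYSTONE_DBPASS` is a placeholder password, not a value from this post; the script only prints the statements.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print the SQL that creates a service database and grants a user access
# from localhost and from any other host.
# Assumption: the password is a placeholder; pick your own.
service_db_sql() {
    local db="$1" user="$2" pass="$3"
    cat <<EOF
CREATE DATABASE IF NOT EXISTS ${db};
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'localhost' IDENTIFIED BY '${pass}';
GRANT ALL PRIVILEGES ON ${db}.* TO '${user}'@'%' IDENTIFIED BY '${pass}';
EOF
}

DB_PASS="${DB_PASS:-KEYSTONE_DBPASS}"
service_db_sql keystone keystone "$DB_PASS"
# To apply on the controller, pipe the output into the client:
#   service_db_sql keystone keystone "$DB_PASS" | mysql -u root -p
```

The same function can be reused for the later services by changing the database and user names.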

- Install and configure

`yum install openstack-keystone httpd mod_wsgi -y`

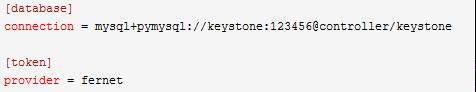

Edit the /etc/keystone/keystone.conf file:

- Sync the keystone database

`su -s /bin/sh -c "keystone-manage db_sync" keystone`

- Initialize the Fernet key repository

`keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone`

- Bootstrap the Identity service

`keystone-manage bootstrap --bootstrap-password 123456 --bootstrap-admin-url http://controller:35357/v3/ --bootstrap-internal-url http://controller:5000/v3/ --bootstrap-public-url http://controller:5000/v3/ --bootstrap-region-id RegionOne`

9. Configure the HTTP server

- Edit the /etc/httpd/conf/httpd.conf file and set the ServerName option

`ServerName controller`

- Link the /usr/share/keystone/wsgi-keystone.conf file

`ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/`

- Start the service (if it fails, you can disable SELinux)

`systemctl enable httpd.service`

- Configure the administrative account

`export OS_USERNAME=admin`

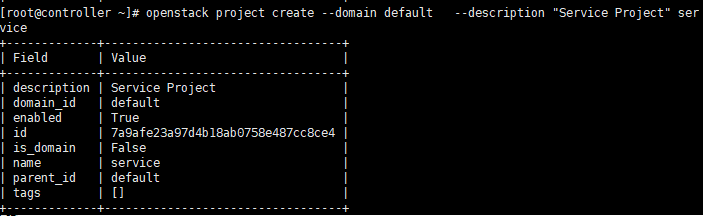

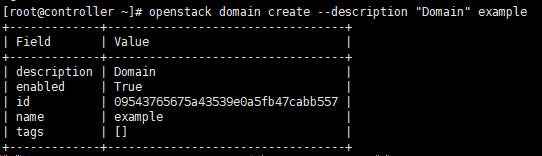

10. Create a domain, projects, users, and roles

- Create a domain:

`openstack domain create --description "Domain" example`

- Create the service project

`openstack project create --domain default --description "Service Project" service`

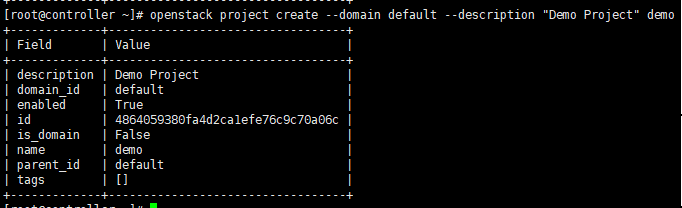

- Create the demo project

`openstack project create --domain default --description "Demo Project" demo`

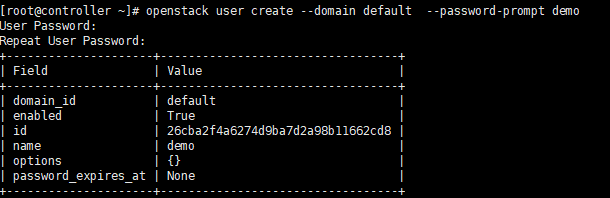

- Create the demo user

`openstack user create --domain default --password-prompt demo`

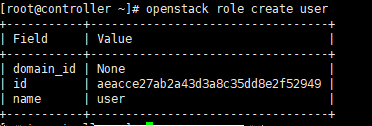

- Create the user role

`openstack role create user`

- Add the user role to the demo project and the demo user

`openstack role add --project demo --user demo user`

11. Verify the configuration

- Unset the environment variables

`unset OS_AUTH_URL OS_PASSWORD`

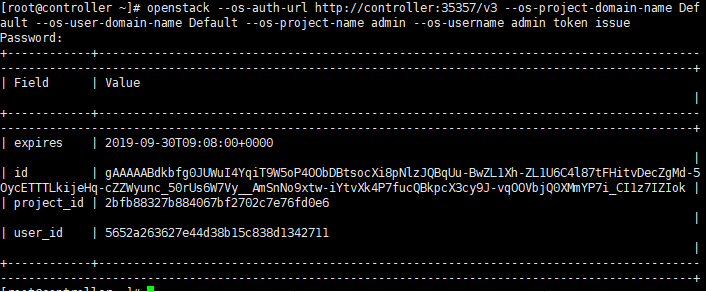

- Request a token as admin

`openstack --os-auth-url http://controller:35357/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name admin --os-username admin token issue`

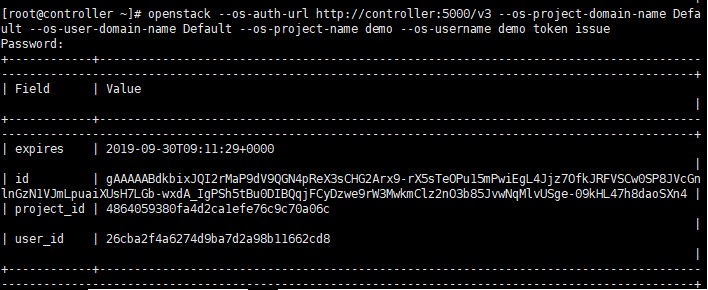

- Request a token as demo

`openstack --os-auth-url http://controller:5000/v3 --os-project-domain-name Default --os-user-domain-name Default --os-project-name demo --os-username demo token issue`

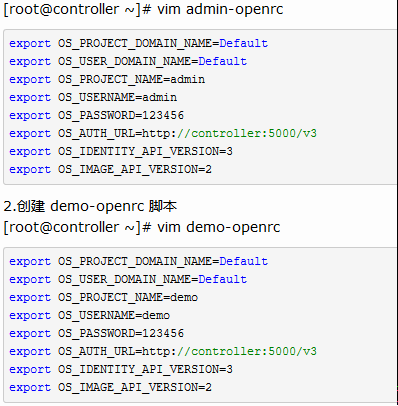

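- Request tokens through a script: to save typing and avoid mistakes, create a complete OpenRC file for each user that holds both the common variables and the user-specific ones

`source admin-openrc`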

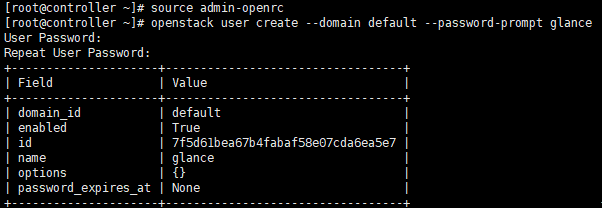
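A minimal sketch of how the two OpenRC files could be generated. The variable names follow the standard OpenStack client environment variables; the admin password and auth URLs are the ones used in the bootstrap and token steps above, while `DEMO_PASS` is a placeholder for whatever was typed at the `--password-prompt`, and `write_openrc` and `OUT_DIR` are hypothetical names.

```shell
#!/usr/bin/env bash
set -euo pipefail

OUT_DIR="${OUT_DIR:-.}"

# write_openrc FILE PROJECT USER PASSWORD AUTH_URL
write_openrc() {
    cat > "$1" <<EOF
export OS_PROJECT_DOMAIN_NAME=Default
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_NAME=$2
export OS_USERNAME=$3
export OS_PASSWORD=$4
export OS_AUTH_URL=$5
export OS_IDENTITY_API_VERSION=3
export OS_IMAGE_API_VERSION=2
EOF
}

# admin: password and URL taken from the bootstrap step above.
write_openrc "$OUT_DIR/admin-openrc" admin admin 123456 http://controller:35357/v3
# demo: DEMO_PASS is a placeholder, not a value from this post.
write_openrc "$OUT_DIR/demo-openrc" demo demo "${DEMO_PASS:-DEMO_PASS}" http://controller:5000/v3

# Load the admin credentials into the current shell.
. "$OUT_DIR/admin-openrc"
echo "Loaded credentials for $OS_USERNAME in project $OS_PROJECT_NAME"
```

With the file sourced, `openstack token issue` no longer needs the long list of `--os-*` options.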

12. Install the Glance service (controller)

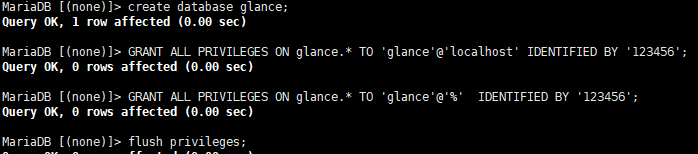

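- Create the glance database and grant privileges

- Load the admin environment variables and create the service credentials

`source admin-openrc`

- Add the admin role to the glance user and the service project

`openstack role add --project service --user glance admin`

- Create the glance service entity

`openstack service create --name glance --description "OpenStack Image" image`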

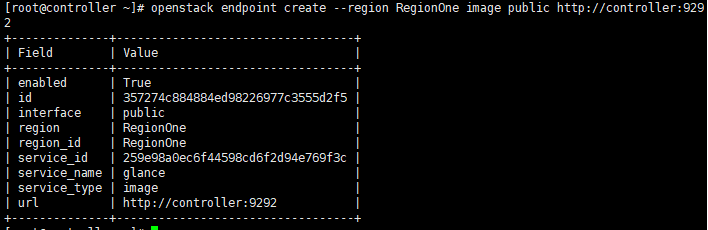

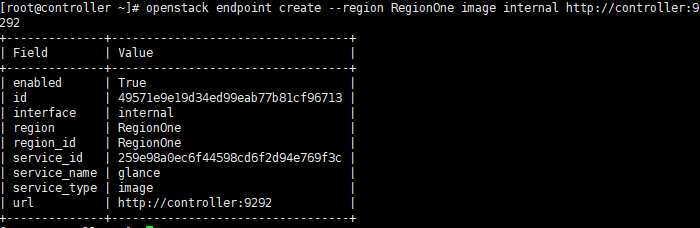

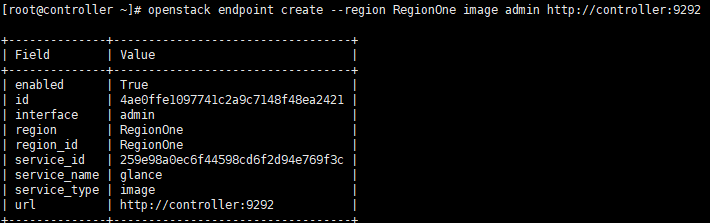

- Create the API endpoints for the Glance image service

`openstack endpoint create --region RegionOne image public http://controller:9292`

`openstack endpoint create --region RegionOne image internal http://controller:9292`

`openstack endpoint create --region RegionOne image admin http://controller:9292`
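The same three-interface pattern comes back for compute and network, so a small helper can generate the commands. This is only a sketch: `endpoint_cmds` is a hypothetical function that prints the commands instead of running them, so they can be reviewed first.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Print (not run) one endpoint-create command per interface.
# Usage: endpoint_cmds SERVICE_TYPE URL [REGION]
endpoint_cmds() {
    local service="$1" url="$2" region="${3:-RegionOne}" iface
    for iface in public internal admin; do
        printf 'openstack endpoint create --region %s %s %s %s\n' \
            "$region" "$service" "$iface" "$url"
    done
}

endpoint_cmds image http://controller:9292
```

On a host with the admin credentials loaded, the output can be piped to `sh` to execute it. URLs that contain shell metacharacters, such as the `%\(tenant_id\)s` form used for Cinder, would need extra quoting before doing that.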

13. Install and configure the Glance components

`yum install openstack-glance -y`

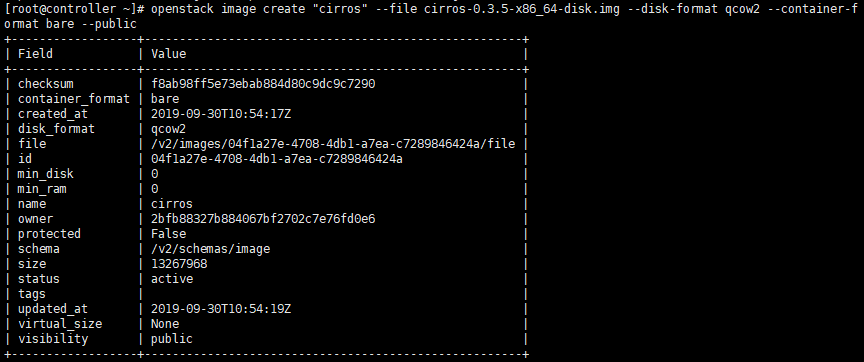

14. Verify:

- Load the admin environment variables and download the image:

`source admin-openrc`

Check:

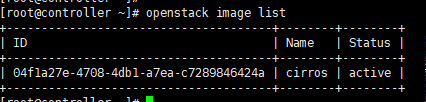

15. Install and configure Compute (controller)

- Create the nova_api, nova, and nova_cell0 databases

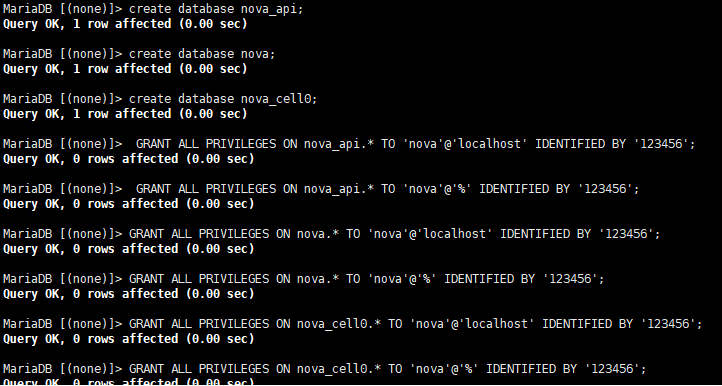

- Create the nova user

`source admin-openrc`

- Add the admin role to the nova user and the service project

`openstack role add --project service --user nova admin`

- Create the nova compute service entity

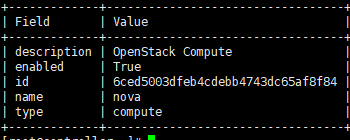

`openstack service create --name nova --description "OpenStack Compute" compute`

- Create the API service endpoints

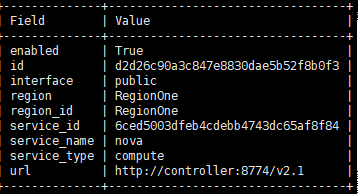

1 | openstack endpoint create --region RegionOne compute public http://controller:8774/v2.1 |

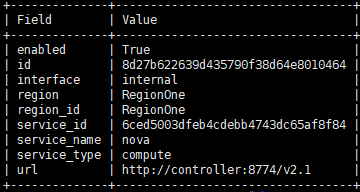

1 | openstack endpoint create --region RegionOne compute internal http://controller:8774/v2.1 |

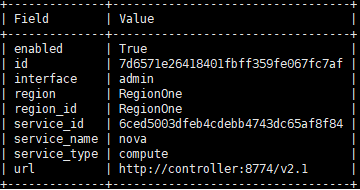

1 | openstack endpoint create --region RegionOne compute admin http://controller:8774/v2.1 |

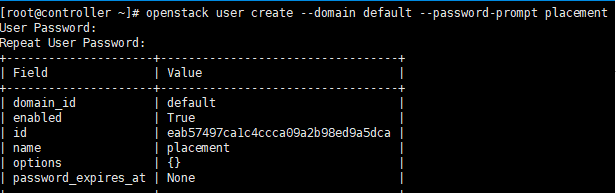

- 创建placement服务用户

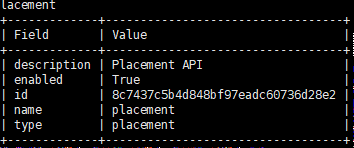

1 | openstack service create --name placement --description "Placement API" placement |

1 | openstack endpoint create --region RegionOne placement public http://controller:8778 |

16、安装和配置nova组件

1 | yum install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api |

Verify:

Restart:

`systemctl enable openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service`

17. Install and configure Compute (compute node)

`yum install openstack-nova-compute`

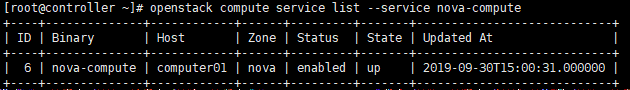

- Confirm that the nova compute service components are running and registered:

On the controller:

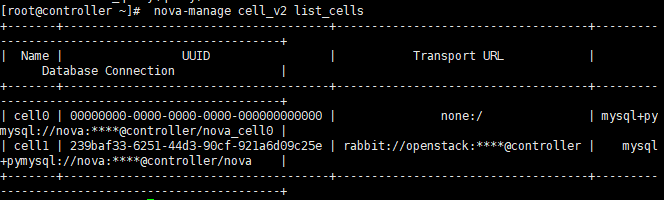

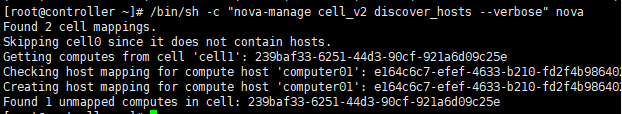

- Discover the compute node:

`su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova`

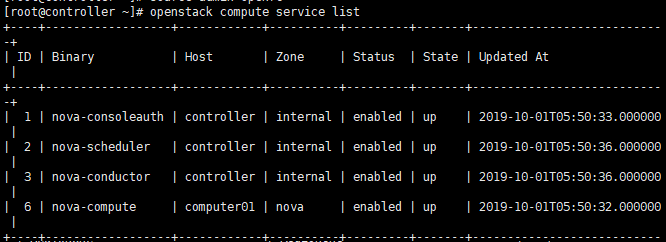

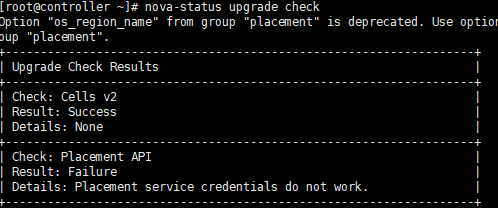

18. Verify Compute operation on the controller

- List the service components

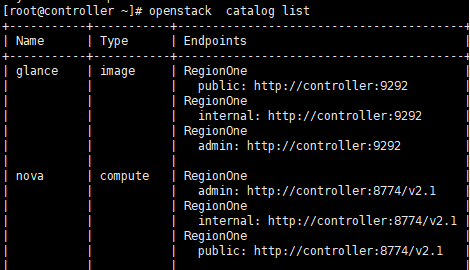

- List the API endpoints in the Identity service to verify connectivity with it

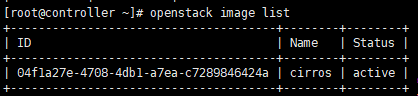

- List the images

- Check the cells and the Placement API

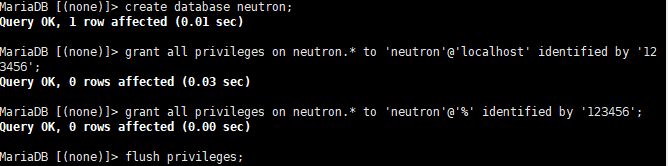

19. Install and configure the Networking components (controller)

- Create the database and grant privileges

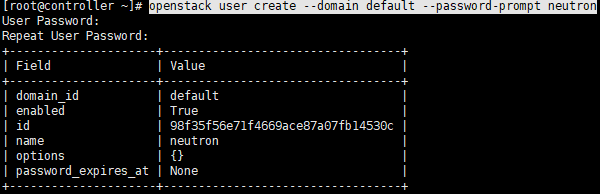

- Create the user and the service

`openstack user create --domain default --password-prompt neutron`

- Add the admin role to the neutron user and the service project

`openstack role add --project service --user neutron admin`

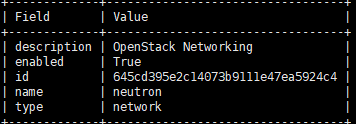

- Create the service entity

`openstack service create --name neutron --description "OpenStack Networking" network`

- Create the Networking service API endpoints

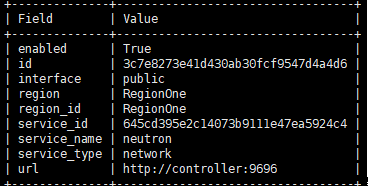

1 | openstack endpoint create --region RegionOne network public http://controller:9696 |

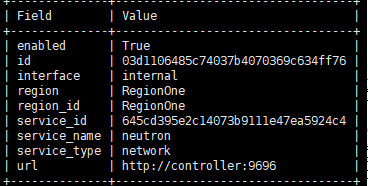

1 | openstack endpoint create --region RegionOne network internal http://controller:9696 |

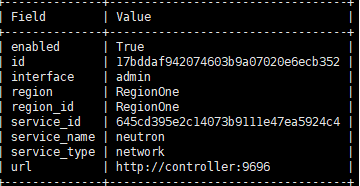

1 | openstack endpoint create --region RegionOne network admin http://controller:9696 |

20、配置网络部分(controller)

1 | yum install openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables |

21、配置网络二层插件(controller)

1 | 编辑 /etc/neutron/plugins/ml2/ml2_conf.ini 文件: |

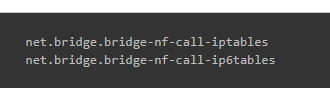

Set it to 1.

Configure the DHCP service by editing the /etc/neutron/dhcp_agent.ini file:

22. Configure the Networking service on the compute node

`yum install openstack-neutron-linuxbridge ebtables ipset`

23. Install the Horizon component (controller)

`yum install openstack-dashboard -y`

- Front-end access:

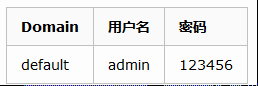

Open http://192.168.40.151/dashboard to view the OpenStack web interface:

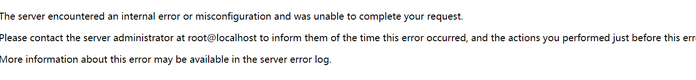

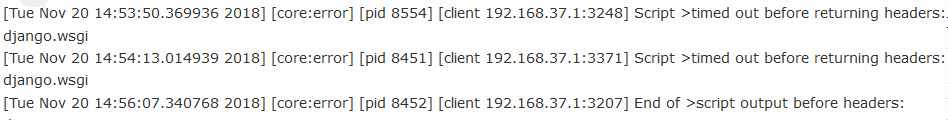

If an error is reported:

Below the line `WSGISocketPrefix run/wsgi`, add:

**WSGIApplicationGroup %{GLOBAL}**, then restart httpd.

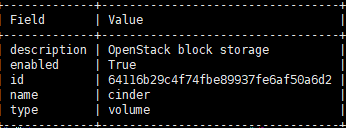

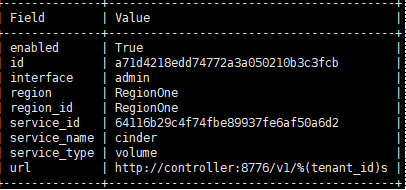
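The fix above can be scripted so that it is applied only once. The sketch below assumes GNU sed (as shipped with CentOS 7) and that the directive lives in /etc/httpd/conf.d/openstack-dashboard.conf, the usual location for the packaged dashboard; by default it works on a scratch stand-in file, and `CONF` is an illustrative variable name.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumption: on the controller you would run this with
#   CONF=/etc/httpd/conf.d/openstack-dashboard.conf
# The default is a scratch file so the sketch can be tried anywhere.
CONF="${CONF:-./openstack-dashboard.conf.example}"
if [ ! -f "$CONF" ]; then
    # Minimal stand-in containing only the line we anchor on.
    printf 'WSGISocketPrefix run/wsgi\n' > "$CONF"
fi

# Insert the directive right after WSGISocketPrefix, but only if it is missing.
if ! grep -q '^WSGIApplicationGroup %{GLOBAL}' "$CONF"; then
    sed -i.bak '/^WSGISocketPrefix run\/wsgi/a WSGIApplicationGroup %{GLOBAL}' "$CONF"
fi
cat "$CONF"
# Then restart the web server: systemctl restart httpd.service
```

The `.bak` copy left by `sed -i.bak` makes it easy to roll back if the dashboard still fails to load.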

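24. Install and configure Cinder

- On the controller node, configure the database: create the cinder database and, as before, an authorized user

- Load the admin environment variables and create the Identity service credentials (123456):

`source admin-openrc`

Add the admin role to the cinder user and the service project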

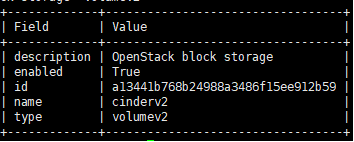

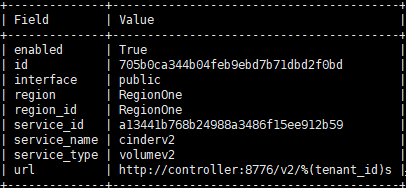

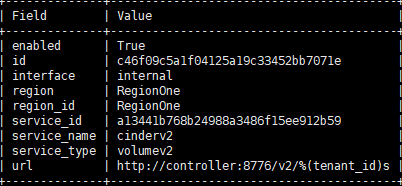

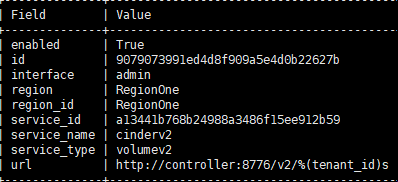

`openstack service create --name cinderv2 --description "OpenStack block storage" volumev2`

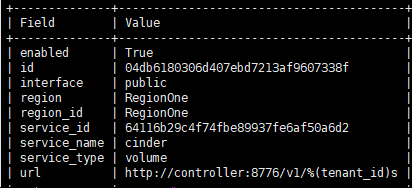

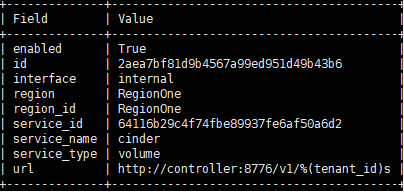

Create the API endpoints

`openstack endpoint create --region RegionOne volume internal http://controller:8776/v1/%\(tenant_id\)s`

`openstack endpoint create --region RegionOne volume admin http://controller:8776/v1/%\(tenant_id\)s`

`openstack endpoint create --region RegionOne volumev2 public http://controller:8776/v2/%\(tenant_id\)s`

`openstack endpoint create --region RegionOne volumev2 internal http://controller:8776/v2/%\(tenant_id\)s`

`openstack endpoint create --region RegionOne volumev2 admin http://controller:8776/v2/%\(tenant_id\)s`

Install and configure the Cinder components

`yum install -y openstack-cinder`

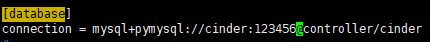

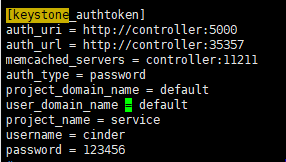

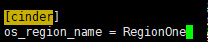

Edit the /etc/cinder/cinder.conf file:

Configure the database connection:

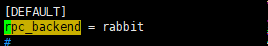

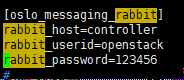

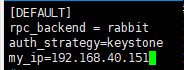

Configure RabbitMQ:

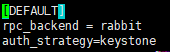

Configure the Identity service:

Configure the node's management IP address:

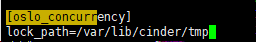

Configure the lock path:

Sync the Cinder service data to the database (ignore any deprecation messages in the output):

`su -s /bin/sh -c "cinder-manage db sync" cinder`

Edit nova.conf:

Start the services:

`systemctl restart openstack-nova-api`

25. Install and configure the storage node (cinder)

- Install and start lvm2

- Create the physical volume and the volume group

`pvcreate /dev/sdb`
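A hedged sketch of the LVM preparation around that command. `/dev/sdb` comes from the line above; the volume group name `cinder-volumes` and the `filter` line are assumptions based on the usual upstream layout, and `lvm_plan` and `lvm_filter` are hypothetical helpers that only print what would be run or written.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Assumptions: /dev/sdb is the spare disk (from the pvcreate line above) and
# the volume group is called cinder-volumes; change both to match your node.
DISK="${DISK:-/dev/sdb}"
VG="${VG:-cinder-volumes}"

# Print the commands instead of running them; they need root and a real disk.
lvm_plan() {
    printf 'pvcreate %s\n' "$DISK"
    printf 'vgcreate %s %s\n' "$VG" "$DISK"
}

# Build an lvm.conf filter that accepts only this disk and rejects the rest.
lvm_filter() {
    printf 'filter = [ "a/%s/", "r/.*/" ]\n' "$(basename "$DISK")"
}

lvm_plan
lvm_filter
```

The filter line belongs in the `devices` section of /etc/lvm/lvm.conf. If the operating system disk also uses LVM, that disk has to be accepted in the filter as well, otherwise the node may not boot cleanly.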

`yum install openstack-cinder targetcli python-keystone -y`

- Edit the configuration file:

`systemctl enable openstack-cinder-volume.service target.service`

`systemctl start openstack-cinder-volume.service target.service`

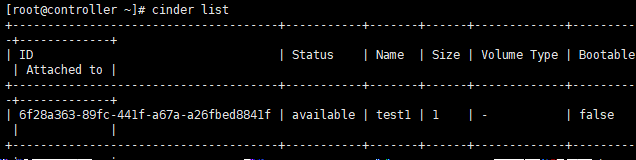

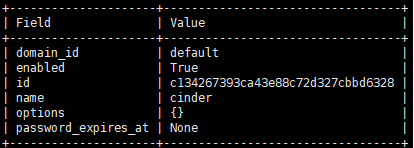

- Verify:

`export OS_VOLUME_API_VERSION=2`

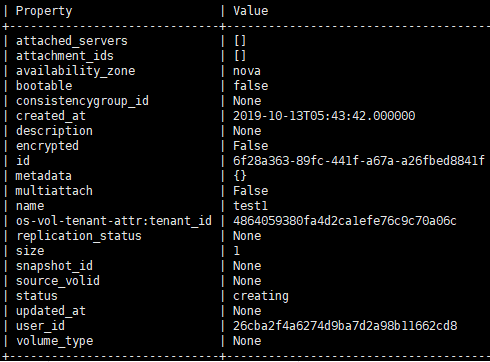

Load the demo script and create a 1 GB volume:

`source demo-openrc`

`cinder create --name test1 1`

Then list the volume you just created: